In this post you will discover the effect of the learning rate in gradient boosting. XGBoost is short for the eXtreme Gradient Boosting package.


It defines the flow of information within the system.

The purpose of this vignette is to show you how to use XGBoost to build a model and make predictions. XGBoost is an efficient and scalable implementation of the gradient boosting framework of Friedman et al. (2000) and Friedman (2001).

An information system is a combination of hardware, software, and telecommunication networks that people build to collect, create, and distribute useful data, typically in an organization.

A problem with gradient boosted decision trees is that they learn quickly and can overfit the training data.

The objective of an information system is to provide appropriate information to the user to gather the data.

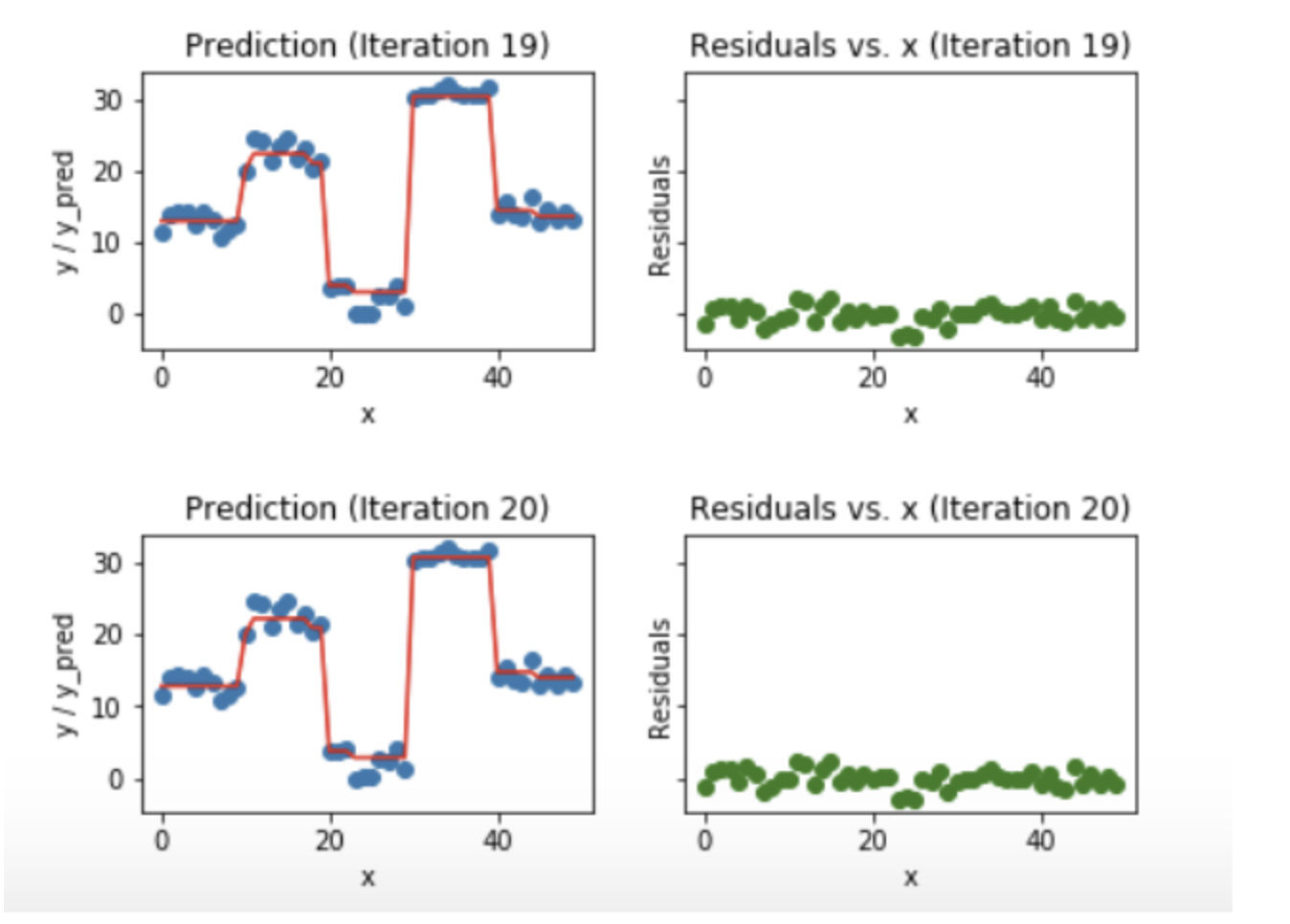

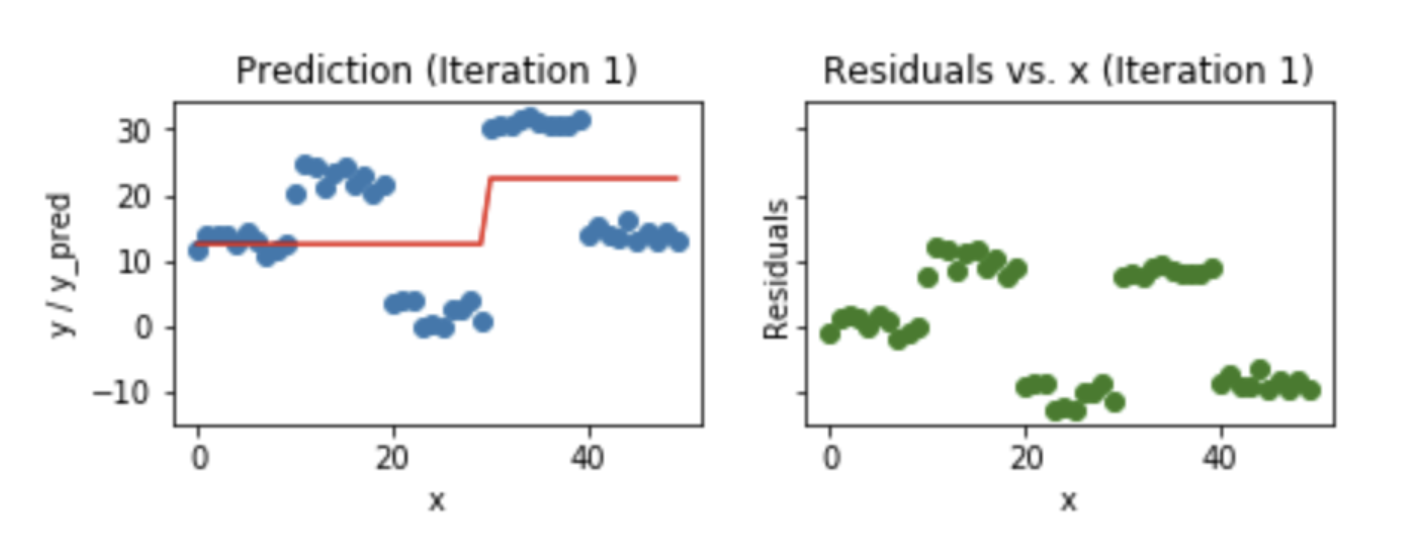

One effective way to slow down learning in a gradient boosting model is to use a learning rate, also called shrinkage (or eta in the XGBoost documentation).
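To make the idea of shrinkage concrete, here is a minimal sketch in plain Python. It is not XGBoost's implementation: it assumes squared-error loss, one-dimensional inputs, and depth-one trees (stumps). The helper names `fit_stump`, `predict_stump`, and `boost`, and the synthetic sine data, are hypothetical and chosen only for illustration. Each round fits a stump to the current residuals and adds its prediction scaled by the learning rate.

```python
import math
import random


def mse(ys, preds):
    """Mean squared error between targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def fit_stump(xs, residuals):
    """Fit a depth-one tree to the residuals by least squares.

    Returns (threshold, left_value, right_value).
    """
    best = None
    cuts = sorted(set(xs))
    for lo, hi in zip(cuts, cuts[1:]):
        thr = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= thr]
        right = [r for x, r in zip(xs, residuals) if x > thr]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, thr, lv, rv)
    return best[1:]


def predict_stump(stump, x):
    thr, lv, rv = stump
    return lv if x <= thr else rv


def boost(xs, ys, n_rounds, learning_rate):
    """Gradient boosting for squared error; returns training MSE per round."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(ys)
    history = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        # Shrinkage: add only a fraction (the learning rate) of each new tree.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
        history.append(mse(ys, preds))
    return history


# Synthetic data (an assumption for this sketch): a noisy sine curve.
rng = random.Random(0)
xs = [i / 10 for i in range(40)]
ys = [math.sin(x) + rng.gauss(0, 0.1) for x in xs]

slow = boost(xs, ys, n_rounds=10, learning_rate=0.1)
fast = boost(xs, ys, n_rounds=10, learning_rate=1.0)
print("training MSE after round 1:", slow[0], "(eta=0.1) vs", fast[0], "(eta=1.0)")
```

In this sketch a smaller learning rate removes a smaller share of the remaining training error in each round, so more rounds are typically needed to reach the same training fit. That slower fitting is what makes shrinkage useful against overfitting.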


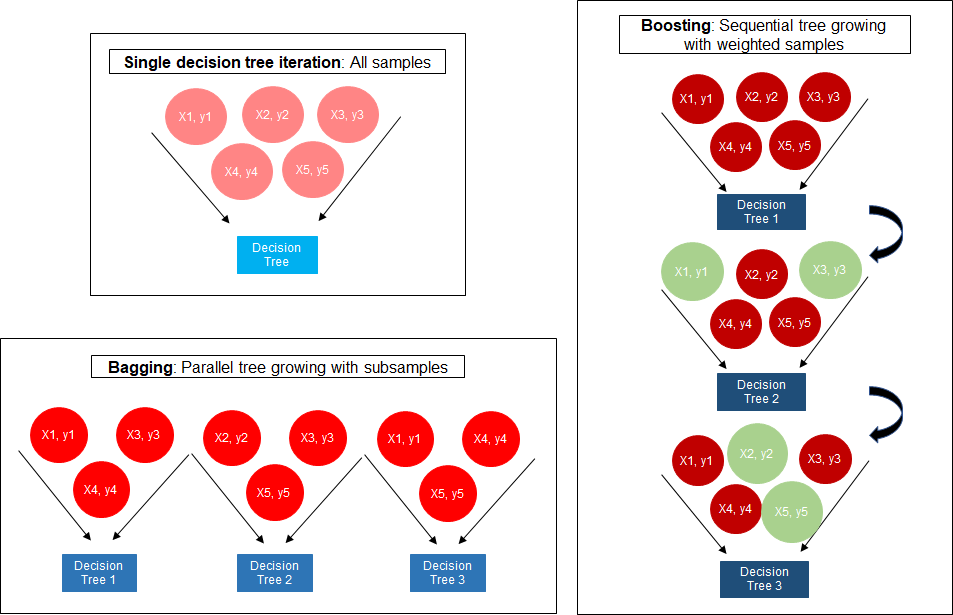
